本作品采用知识共享署名-非商业性使用-相同方式共享 4.0 国际许可协议进行许可。

本作品采用知识共享署名-非商业性使用-相同方式共享 4.0 国际许可协议进行许可。

本作品 (李兆龙 博文, 由 李兆龙 创作),由 李兆龙 确认,转载请注明版权。

引言

性能优化之路道阻且长,因为脱敏规定,此文记录一个问题排查的简化过程。

问题背景

一个业务的查询绝大多数查询如下所示:

SELECT max(field) FROM m WHERE ((a = ‘1254801811’ AND b = ‘’ AND c = ‘lzl’ AND d = ‘lzl2’ AND e = ‘’ AND f = ‘’ AND g = ‘5702962’ AND h = ‘20466’) OR (a = ‘1254801811’ AND b = ‘’ AND c = ‘lzl’ AND d = ‘lzl2’ AND e = ‘’ AND f = ‘’ AND g = ‘5702962’ AND h = ‘20467’) OR (a = ‘1254801811’ AND b = ‘’ AND c = ‘lzl’ AND d = ‘lzl2’ AND e = ‘’ AND f = ‘’ AND g = ‘5702962’ AND h = ‘20468’) OR (a = ‘1254801811’ AND b = ‘’ AND c = ‘lzl’ AND d = ‘lzl2’ AND e = ‘’ AND f = ‘’ AND g = ‘5702962’ AND h = ‘20469’) OR (a = ‘1254801811’ AND b = ‘’ AND c = ‘lzl’ AND d = ‘lzl2’ AND e = ‘’ AND f = ‘’ AND g = ‘5702962’ AND h = ‘20470’) OR (a = ‘1254801811’ AND b = ‘’ AND c = ‘lzl’ AND d = ‘lzl2’ AND e = ‘’ AND f = ‘’ AND g = ‘5702962’ AND h = ‘20471’) OR (a = ‘1254801811’ AND b = ‘’ AND c = ‘lzl’ AND d = ‘lzl2’ AND e = ‘’ AND f = ‘’ AND g = ‘5702962’ AND h = ‘20472’) OR (a = ‘1254801811’ AND b = ‘’ AND c = ‘lzl’ AND d = ‘lzl2’ AND e = ‘’ AND f = ‘’ AND g = ‘5702962’ AND h = ‘20473’) OR (a = ‘1254801811’ AND b = ‘’ AND c = ‘lzl’ AND d = ‘lzl2’ AND e = ‘’ AND f = ‘’ AND g = ‘5702962’ AND h = ‘20474’) OR (a = ‘1254801811’ AND b = ‘’ AND c = ‘lzl’ AND d = ‘lzl2’ AND e = ‘’ AND f = ‘’ AND g = ‘5702962’ AND h = ‘20475’)) AND time >= 477088h AND time <= 28626779m GROUP BY time(1h), a, b, c, d, e, f, g, h fill(none)

内部发现这种sql在多条并发执行时不但执行时间较长,而且会吃满节点cpu,因为内部存在限流,读读分离等逻辑,这种行为会导致大量排队,从而一段时间内查询平均时延从20ms升高到1000ms,严重影响业务。

最终经过一系列的定位,我们定位到瓶颈在 e = '' 的条件筛选上。

时序数据库中需要基于条件去做时间线的筛选,在得到最终时间线后去实际的数据文件中去获取数据。等于空和不等于空这样的条件相对于c = 'lzl'的条件查询大不相同。前者需要首先定位到所有tagkey所有的时间线,然后获取measurement所有的时间线,最后求差集才可以。因为引擎实现的原因,并没有在索引中存储tagkey所属的全部时间线,而是存储tag的所有tagvalue对应的所有时间线,所以在求tagkey所属的全部时间线时需要做一个交集。而e的tagvalue数量在10w级别。

tagkey所属tagvalue的合并逻辑类似下述代码:

func (s *SeriesIDSet) Merge(others ...*SeriesIDSet) {

bms := make([]*Bitmap, 0, len(others)+1)

s.RLock()

bms = append(bms, s.bitmap)

s.RUnlock()

for _, other := range others {

other.RLock()

defer other.RUnlock()

bms = append(bms, other.bitmap)

}

result := FastOr(bms...)

s.Lock()

s.bitmap = result

s.Unlock()

}

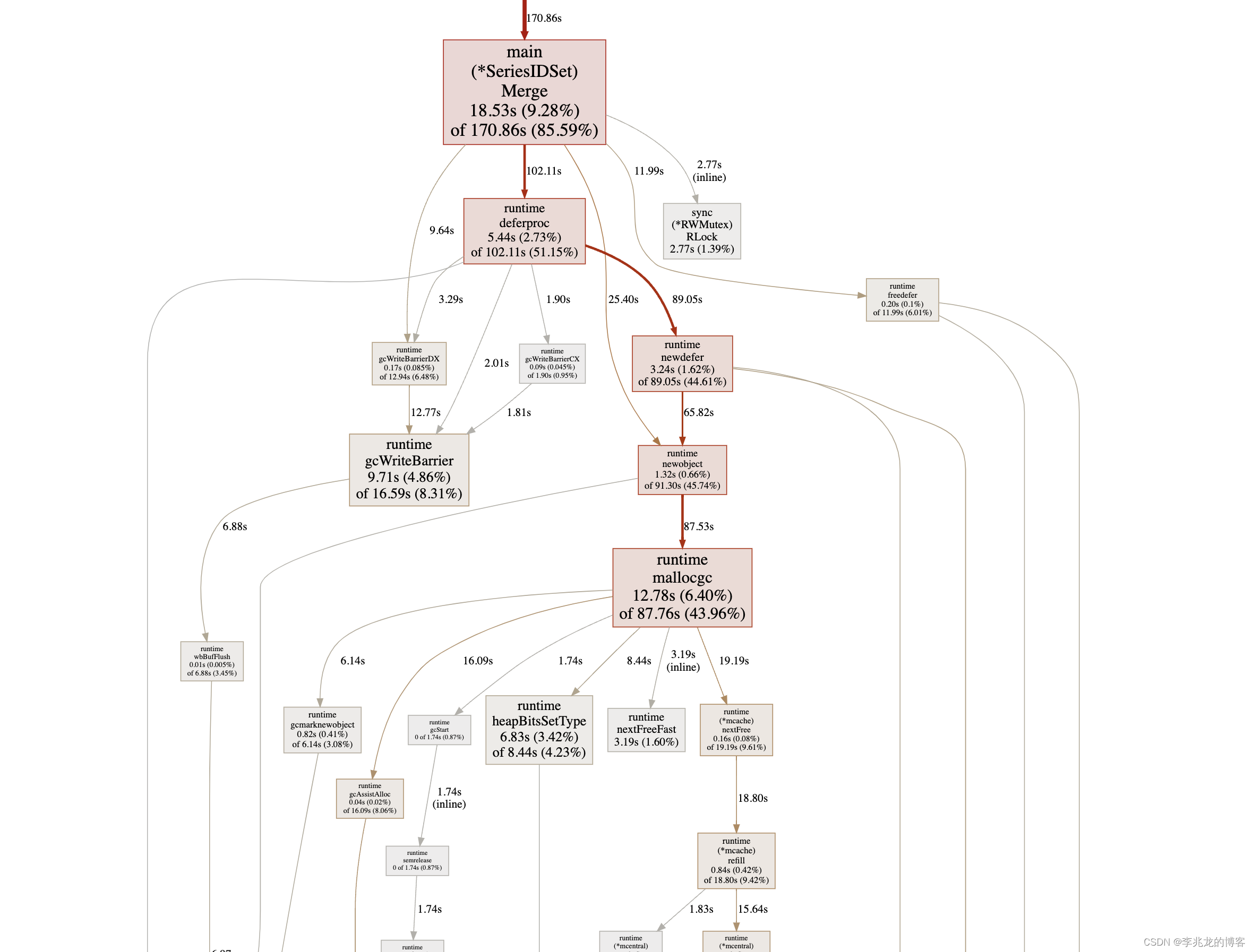

可以看到当others过多时,会存在相当多的defer函数,在排查中我们发现这个函数的瓶颈来自于defer。

当defer在函数内在8个以上时,会使用堆上分配的方式[2],在允许时执行runtime.deferproc,runtime.deferproc 中 runtime.newdefer 会基于go本身的内存分配器获得runtime._defer结构体,这里包含三种路径:

- 从调度器的延迟调用缓存池

sched.deferpool中取出结构体并将该结构体追加到当前 Goroutine 的缓存池中; - 从 Goroutine 的延迟调用缓存池

pp.deferpool中取出结构体; - 通过

runtime.mallocgc在堆上创建一个新的结构体;

然后将runtime._defer插入Goroutine _defer 链表的最前面。

在函数结束时runtime.deferreturn 会从 Goroutine 的 _defer 链表中取出最前面的runtime._defer 并调用 runtime.jmpdefer 传入需要执行的函数和参数。

当defer在函数内在8个以下时,在Go 1.14及之后的版本会使用open coded defer[1],简单讲就是会把defer的内容拷贝到栈上,并给函数准备一个FUNCDATA,这样就不存在对象的申请和释放了,性能相当优秀。

在函数内部defer过多时,会大量触发runtime.mallocgc ,用于分配defer对象。

下面的代码模拟了现网场景以及对应优化:

package main

import (

"sync"

"time"

"fmt"

"net/http"

_ "net/http/pprof"

)

type SeriesIDSet struct {

sync.RWMutex

bitmap *Bitmap

}

type Bitmap struct{

}

func FastOr(bitmaps ...*Bitmap) *Bitmap {

time.Sleep(10 * time.Millisecond)

return &Bitmap{

}

}

func (s *SeriesIDSet) Merge(others ...*SeriesIDSet) {

bms := make([]*Bitmap, 0, len(others)+1)

s.RLock()

bms = append(bms, s.bitmap)

s.RUnlock()

for _, other := range others {

other.RLock()

defer other.RUnlock()

bms = append(bms, other.bitmap)

}

result := FastOr(bms...)

s.Lock()

s.bitmap = result

s.Unlock()

}

func (s *SeriesIDSet) OptimizationMerge(others ...*SeriesIDSet) {

bms := make([]*Bitmap, 0, len(others)+1)

s.RLock()

bms = append(bms, s.bitmap)

s.RUnlock()

for _, other := range others {

other.RLock()

bms = append(bms, other.bitmap)

}

defer func() {

for _, other := range others {

other.RUnlock()

}

}()

result := FastOr(bms...)

s.Lock()

s.bitmap = result

s.Unlock()

}

func main() {

go func() {

http.ListenAndServe("localhost:6060", nil)

}()

mainSet := &SeriesIDSet{

bitmap: &Bitmap{

}}

var others []*SeriesIDSet

cardinality := 5000000

for i := 0; i < cardinality; i++ {

others = append(others, &SeriesIDSet{

bitmap: &Bitmap{

}})

}

startSerial := time.Now()

for i := 0; i < 50; i++ {

mainSet.Merge(others...)

}

elapsedSerial := time.Since(startSerial)

fmt.Printf("Cardinality %d, Serial Merge took %s\n", cardinality, elapsedSerial)

var wg sync.WaitGroup

startConcurrent := time.Now()

for i := 0; i < 50; i++ {

wg.Add(1)

go func() {

defer wg.Done()

mainSet.Merge(others...)

}()

}

wg.Wait()

elapsedConcurrent := time.Since(startConcurrent)

fmt.Printf("Cardinality %d, Concurrent Merge took %s\n", cardinality, elapsedConcurrent)

startConcurrent = time.Now()

for i := 0; i < 50; i++ {

mainSet.OptimizationMerge(others...)

}

elapsedConcurrent = time.Since(startConcurrent)

fmt.Printf("Cardinality %d, Serial OptimizationMerge took %s\n", cardinality, elapsedConcurrent)

startConcurrent = time.Now()

for i := 0; i < 50; i++ {

wg.Add(1)

go func() {

defer wg.Done()

mainSet.OptimizationMerge(others...)

}()

}

wg.Wait()

elapsedConcurrent = time.Since(startConcurrent)

fmt.Printf("Cardinality %d, Concurrent OptimizationMerge took %s\n", cardinality, elapsedConcurrent)

}

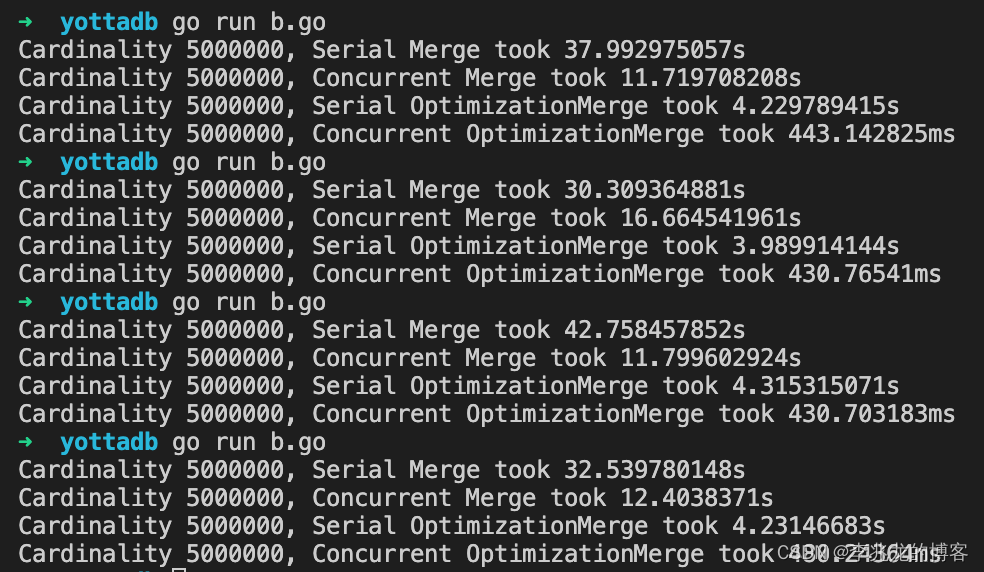

开发机器规格32c64g

可以看到在未优化defer的情况下,并行只比串行快了四倍不到,这证明对象申请的过程存在竞争,多个goroutine互相影响。也符合我们现网的观察。

在优化defer后,并行比串行快了近十倍。

仅串行优化前后性能也差了九倍。

未优化时cpu profile如下:

结论

这里当然只是在讨论defer。修改是顺便的事情,这只是e = ''的一部分,最终我们使用了更贴合业务的形式将查询性能优化了95%以上。

参考: